Handling historical bid-ask data

Build a Data Lake with Airflow and AWS

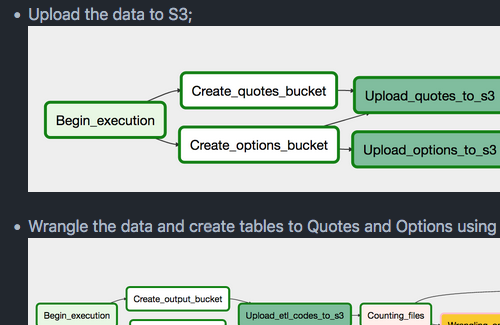

In this project, I processed historical bid/ask prices of Brazilian stocks and options so that other applications can use them later. I used Airflow to automate the upload of local data to S3 and to run an ETL pipeline that builds and manages a data lake hosted on S3. I also included a Flask app that uses Dash to demonstrate how to consume the data.
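As a rough illustration of the upload step (a minimal sketch, not the project's actual code), the snippet below walks a local folder and maps each file to an S3 object key. The function names `plan_uploads` and `upload_all`, the `prefix` argument, and the injected `upload` callable are all hypothetical and exist only for this example.

```python
from pathlib import Path
from typing import Callable, Iterator, Tuple


def plan_uploads(local_dir: str, prefix: str) -> Iterator[Tuple[Path, str]]:
    """Yield (local_path, s3_key) pairs for every file under local_dir."""
    root = Path(local_dir)
    for path in sorted(root.rglob("*")):
        if path.is_file():
            # Keep the relative folder layout under the chosen key prefix.
            relative = path.relative_to(root).as_posix()
            key = "/".join(part for part in (prefix.strip("/"), relative) if part)
            yield path, key


def upload_all(local_dir: str, prefix: str,
               upload: Callable[[str, str], None]) -> int:
    """Call upload(local_file, s3_key) for each file; return the file count.

    The upload callable is an assumption of this sketch: it hides the
    actual S3 client so the planning logic can run without AWS access.
    """
    count = 0
    for path, key in plan_uploads(local_dir, prefix):
        upload(str(path), key)
        count += 1
    return count
```

With Boto3, the `upload` argument could wrap `boto3.client("s3").upload_file(filename, bucket, key)` for a bucket of your choice, and `upload_all` could serve as the `python_callable` of an Airflow `PythonOperator` so the upload runs on a schedule.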

This project was developed in connection with Udacity's Data Engineer Nanodegree. I used Python, Apache Spark, and Apache Airflow; the AWS services EMR, S3, and Athena; and the Python packages Dash, Boto3, and Flask.