Sign Language Recognition System

Using Hidden Markov Models

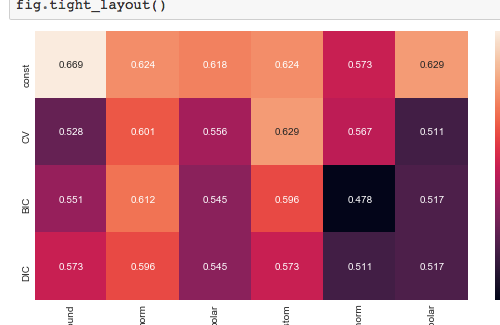

In this project, I built a system that recognizes words communicated in American Sign Language (ASL). To do this, I trained a set of Hidden Markov Models (HMMs) on part of a preprocessed dataset of hand and nose positions tracked in video, and used them to identify individual words in test sequences. Finally, I experimented with model selection techniques, including the Bayesian Information Criterion (BIC), the Discriminative Information Criterion (DIC), and K-fold cross-validation.
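The model selection step picks how many hidden states each word's HMM should have. Below is a minimal sketch of how BIC and DIC scores could be computed. It assumes each candidate model is a Gaussian HMM with diagonal covariances whose log-likelihood has already been computed (for example with hmmlearn's `GaussianHMM.score`). The function names, the parameter-count formula for that assumed model type, and the example numbers are hypothetical and not taken from this project's code.

```python
import math
from typing import Mapping, Sequence


def hmm_free_parameters(n_states: int, n_features: int) -> int:
    """Free parameters of an assumed Gaussian HMM with diagonal covariances.

    transitions: n*(n-1), initial probabilities: n-1,
    means: n*d, variances: n*d  ->  n^2 + 2*n*d - 1
    """
    return n_states * n_states + 2 * n_states * n_features - 1


def bic(log_likelihood: float, n_states: int, n_features: int, n_points: int) -> float:
    """Bayesian Information Criterion: -2 log L + p log N (lower is better)."""
    p = hmm_free_parameters(n_states, n_features)
    return -2.0 * log_likelihood + p * math.log(n_points)


def dic(own_log_likelihood: float, other_log_likelihoods: Sequence[float]) -> float:
    """Discriminative Information Criterion (higher is better).

    Log-likelihood of the model on its own word's data minus the mean
    log-likelihood it assigns to every other word's data.
    """
    mean_other = sum(other_log_likelihoods) / len(other_log_likelihoods)
    return own_log_likelihood - mean_other


def select_by_bic(log_likelihoods: Mapping[int, float], n_features: int, n_points: int) -> int:
    """Return the number of states whose model has the lowest BIC score."""
    scores = {
        n: bic(ll, n, n_features, n_points) for n, ll in log_likelihoods.items()
    }
    return min(scores, key=scores.get)


# Hypothetical log-likelihoods for 2, 3 and 4 hidden states,
# 4 features per frame and 500 frames of training data.
best = select_by_bic({2: -1200.0, 3: -1100.0, 4: -1090.0}, n_features=4, n_points=500)
print(best)
```

Here the 4-state model fits slightly better than the 3-state model, but its 15 extra parameters cost more in the BIC penalty than the likelihood gain, so 3 states is chosen. K-fold cross-validation follows the same pattern, except that each candidate is scored by its average log-likelihood on held-out folds rather than by a penalized training likelihood.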

This project is written in Python and is part of Udacity's Artificial Intelligence Nanodegree program.